Model Performance

1 Why do model evaluation?

2 How to evaluate a model?

What data to evaluate on

What method to use (experimental evaluation methods / model selection)

Cross Validation

K-fold Cross Validation
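
As a minimal sketch of the idea behind k-fold cross validation, the data is split into k folds, and each fold serves once as the held-out set while the remaining folds are used for training. The function name `k_fold_indices` and the choice of contiguous, unshuffled folds are assumptions made for illustration; in practice the data is usually shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Return a list of (train_indices, test_indices) pairs, one per fold.

    Folds are contiguous and unshuffled (an illustrative simplification).
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")

    # When n_samples is not divisible by k, the first `extra` folds get one more sample.
    base, extra = divmod(n_samples, k)

    folds = []
    start = 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        folds.append((train, test))
        start += size
    return folds


# Usage: each sample appears in exactly one held-out fold.
for train_idx, test_idx in k_fold_indices(10, 3):
    print("train:", train_idx, "test:", test_idx)
```

The model would be trained k times, once per pair, and the k held-out scores averaged to estimate generalization performance.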

What metrics to compare (performance measures)

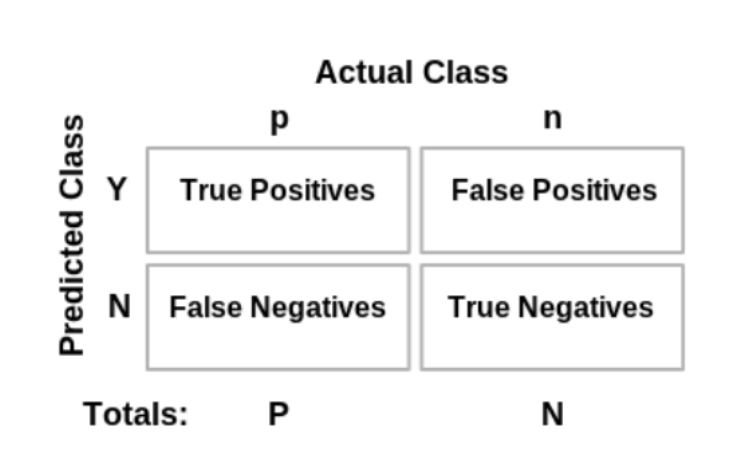

Classification-Confusion matrix
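
For a binary classifier, the confusion matrix counts true positives, false positives, false negatives, and true negatives, and the usual metrics (precision, recall, F1) follow from those counts. The sketch below is illustrative only; the function names and the convention of returning 0.0 when a denominator is zero are assumptions, not something stated in the source.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count (tp, fp, fn, tn) for a binary problem."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall_f1(tp, fp, fn):
    """Derive precision, recall and F1; 0.0 is returned when undefined (assumed convention)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(tp, fp, fn, tn)
print(precision_recall_f1(tp, fp, fn))
```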

Classification-ROC
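
An ROC curve plots the true positive rate against the false positive rate as the decision threshold is lowered from the highest score to the lowest. The following is a rough sketch of how the curve's points could be computed from labels and scores; `roc_points` is a hypothetical helper, and it assumes labels are 0/1 with at least one example of each class.

```python
def roc_points(y_true, scores):
    """Return ROC points as (fpr, tpr) pairs, starting at (0, 0) and ending at (1, 1)."""
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative example")

    # Visit examples from highest to lowest score, i.e. lower the threshold step by step.
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])

    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Examples tied at the same score cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


for fpr, tpr in roc_points([1, 1, 0, 1, 0, 0], [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```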

Classification-AUC
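
AUC, the area under the ROC curve, can be read as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below computes it directly from that pairwise definition; `auc_pairwise` is a hypothetical name, and the O(P·N) loop is chosen for clarity rather than speed.

```python
def auc_pairwise(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t != 1]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(auc_pairwise([1, 1, 0, 1, 0, 0], [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]))
```

An AUC of 0.5 corresponds to random ranking and 1.0 to a perfect ranking of positives above negatives.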

Regression
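
For regression, commonly used performance measures include mean absolute error (MAE), mean squared error (MSE), root mean squared error (RMSE), and the coefficient of determination (R²). The source does not say which of these it covers, so the selection below is an assumption, and `regression_metrics` is a hypothetical helper.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return a dict with MAE, MSE, RMSE and R^2 for paired true/predicted values."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")

    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n

    # R^2 = 1 - SS_res / SS_tot; undefined when the targets are constant.
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    ss_res = sum(e * e for e in errors)
    r2 = 1.0 - ss_res / ss_tot if ss_tot else float("nan")

    return {"mae": mae, "mse": mse, "rmse": math.sqrt(mse), "r2": r2}


print(regression_metrics([3.0, 5.0, 7.0, 9.0], [2.0, 5.0, 8.0, 9.0]))
```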

3 Failure Analysis

Machine Learning End-to-End Pipeline

Business Design

Data acquisition

Data preparation

Training & Validation

Testing & Evaluation

Deployment & Inference
